Abstract

Artificial Intelligence tools have become increasingly accessible to the public, allowing users to create illustrations with ease. However, imitating specific art styles and creating stylistically consistent and controllable images remains a challenge. This paper explores some methods for improving stylistic control and consistency, and whether artists can use the results of these methods in their workflows. For this we used illustrations kindly provided by children's book illustrator Betina Gotzen-Beek as well as her feedback. Stable Diffusion was used to generate images based on user input text prompts. The generation of these images can be further tuned to fit a specific style by using Low Rank Adaptation. Finally, to gain more control over the generated images, we used ControlNet, an extension to Stable Diffusion that provides additional control over the generated images. This approach was able to produce satisfying results for a number of images, demonstrating its potential to supplement an artist's workflow. With minor manual adjustments, it could be used in an artist's workflow to speed up the illustration process. However, there are still some limitations, particularly in terms of control over image generation, which may be overcome in the near future as the underlying technologies are further developed.

Introduction

Although artworks generated by Artificial Intelligence (AI) have existed for years, the release of tools such as DALL-E 2 in 2022 has significantly increased accessibility, resulting in a surge in popularity. The impact of AI-generated imagery on the art world became apparent when an AI-generated image won first place in the digital arts category of a fine arts competition without the judges knowing it was created by AI. This sparked outrage among artists, with some claiming that it is “anti-artist” (Palmer, 2022; The New York Times, 2022).

This concern arises from the fact that AI-generated imagery can be indistinguishable from imagery created by humans, which has led many artists to worry about their job security. Intellectual property lawyer Kate Downing predicts that this will have a significant impact on artists, particularly those creating illustrations and stock images (Observer, 2023). Even high-profile artist Takashi Murakami has expressed concerns about the rise of AI, stating that he “work[s] with a certain kind of fear of one day being replaced” (Randolph, Biffot-Lacut, 2023).

While generating visually striking images may seem easy, integrating them into an artist’s workflow raises questions about maintaining a consistent style and form, which is crucial for effective storytelling. Therefore, the question remains: is AI truly capable of replacing human artists? Could artists use these tools to improve and speed up the illustration process?

This project aims to test the possibilities and limitations of AI-created art. Working with input and artworks from children’s book illustrator Betina Gotzen-Beek, it focuses on recreating her specific art style using AI tools and evaluating whether the generated images could be used by artists.

Technologies

Numerous AI image generation tools are available, each providing varying degrees of control over image generation. DALL-E 2, launched by OpenAI in November 2022, quickly became the first mainstream image generator. Other popular tools include Microsoft Designer’s Image Creator and Google’s ImageFX (Ortiz, 2024).

As each tool has its own specific advantages and drawbacks, some may be better suited to certain tasks than others. For this project we needed a tool that allows as much creative freedom and direction as possible in order to consistently create images in the desired art style. Ideally, it should also be available for free and run locally to avoid the waiting time that the usage of most online tools entails.

This is why we chose Stable Diffusion for this project: it offers extensive methods to control image generation, such as text prompts, various samplers, and customisable image resolution and step count. Additionally, it offers other features like image-to-image generation, inpainting and upscaling. As the code is available on GitHub, many users build their own extensions and modifications (Stability AI, 2023).

Stable Diffusion

Stable Diffusion is a text-to-image latent diffusion model: it takes a text prompt as input and outputs a generated image matching that prompt (Stable Diffusion Web, 2024). “Latent” refers to the fact that the diffusion process runs in a compressed latent space rather than directly on pixels. The first version was released in 2022, and several updated models have been released since then (Rombach, R., Blattmann, A., Lorenz, D., Esser, P., Ommer, B., 2022).

For our project, we used the Automatic1111 Web UI, which provides a graphical user interface for many different Stable Diffusion functionalities such as text-to-image and image-to-image (Automatic1111, 2023). It also supports extensions such as Low-Rank Adaptation (LoRA) and ControlNet, both of which we employed in this project to achieve more consistent and controllable results. These concepts will be explained in subsequent sections.

Training Stable Diffusion

In general, diffusion models are designed to generate new data similar to the data they have been trained on — in the case of Stable Diffusion this data refers to images. There are two important processes in a diffusion model: Forward Diffusion and Reverse Diffusion. As this paper focuses on image generation and Stable Diffusion, images will be used as examples.

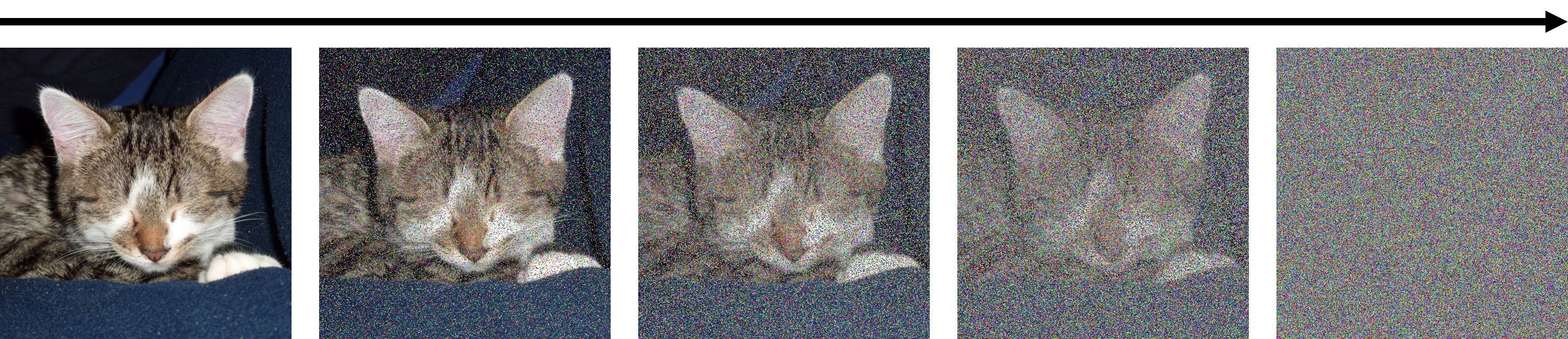

Forward Diffusion

Forward Diffusion takes a training image as input and adds some noise to it. This new image with added noise is then used as input for the next step, where even more noise is added. This is repeated a certain number of times (chosen prior to training) resulting in an image containing random noise in which the original image can no longer be recognised.

Figure 1: forward diffusion of an image of a cat
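The repeated noising described above can be sketched in a few lines. This is a simplified illustration rather than Stable Diffusion's actual procedure: the function name `forward_diffusion`, the fixed `noise_scale` and the five-value "image" are assumptions made for the example, and a real noise schedule also scales down the original signal at each step.

```python
import random

def forward_diffusion(pixels, num_steps, noise_scale=0.3, seed=0):
    """Return the image after 0, 1, ..., num_steps rounds of added noise."""
    rng = random.Random(seed)
    current = list(pixels)
    history = [list(current)]
    for _ in range(num_steps):
        # Each step takes the previous noisy image and adds more Gaussian noise.
        current = [p + rng.gauss(0.0, noise_scale) for p in current]
        history.append(current)
    return history

# A tiny stand-in "image" of five grey values between 0 and 1 (hypothetical data).
image = [0.0, 0.25, 0.5, 0.75, 1.0]
noisy_versions = forward_diffusion(image, num_steps=10)
```

`noisy_versions[0]` is the untouched input, and each later entry is noisier than the one before it.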

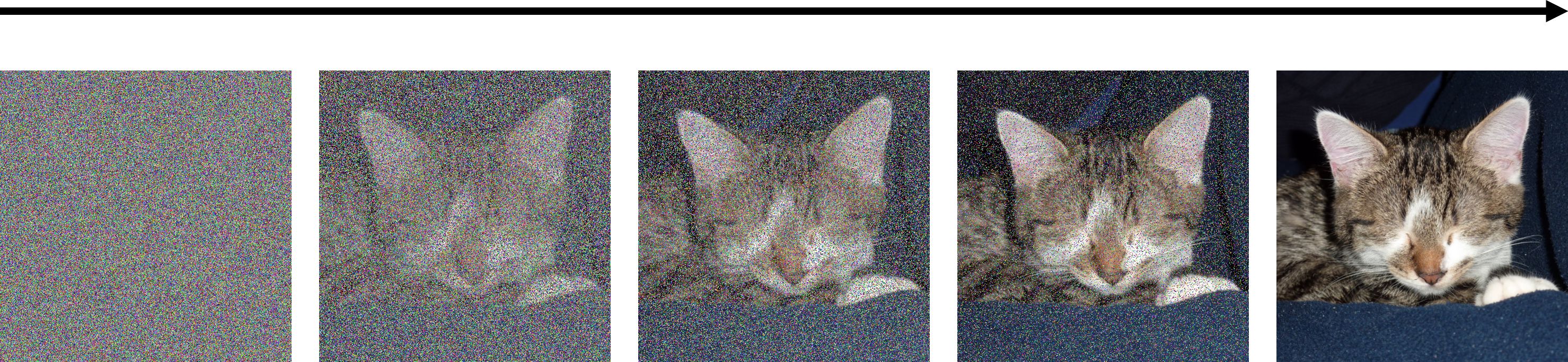

Reverse Diffusion

Reverse Diffusion means the opposite: it takes a random noise image and removes some noise at each step, resulting in a clear and noise-free image.

Figure 2: reverse diffusion resulting in an image of a cat

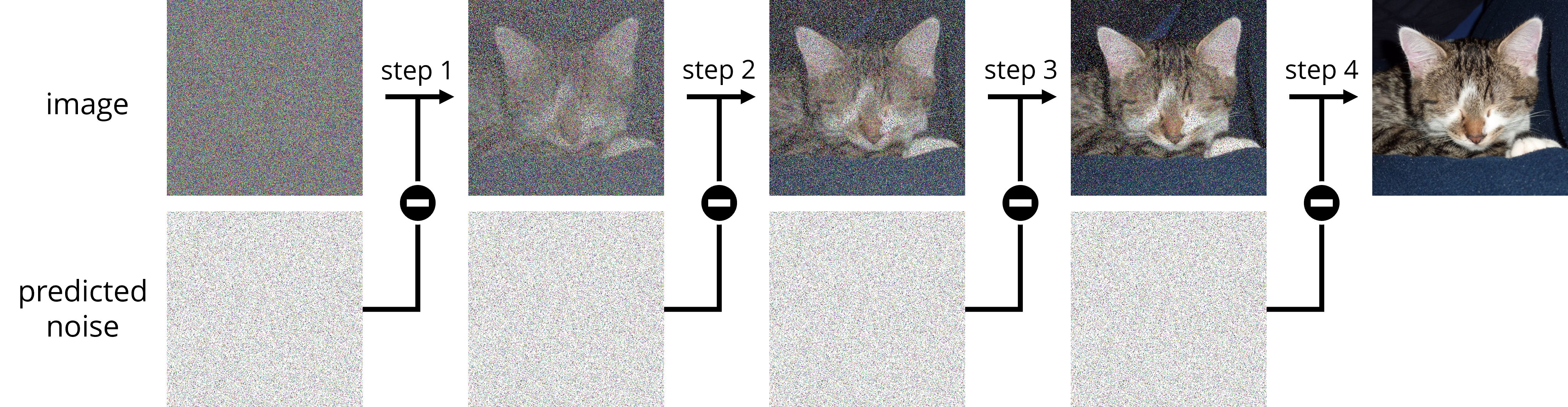

To do this, the model needs to know how much noise to remove per step. A U-Net model (neural network) is used to predict this noise. To train it, training images are first turned into random noise in several steps using Forward Diffusion.

The U-Net model then estimates the amount of noise added at each step. These predictions are then compared to the ground truth — the noise used during Forward Diffusion — and the model’s weights are adjusted accordingly. After training this noise predictor, it is able to transform a noisy image into a clear one by subtracting the predicted noise at each step.

Figure 3: reverse diffusion: subtracting the noise predicted by the U-Net model from the image for each step
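The comparison between predicted and actual noise is commonly expressed as a mean squared error. The notation below is an assumption introduced here for illustration and does not appear in the original text: $x_0$ is a training image, $\epsilon$ the noise added during Forward Diffusion, $x_t$ the noisy image at step $t$, and $\epsilon_\theta$ the U-Net noise predictor with weights $\theta$.

$$
\mathcal{L}(\theta) = \mathbb{E}_{x_0,\,\epsilon,\,t}\left[\,\lVert \epsilon - \epsilon_\theta(x_t, t) \rVert^2\,\right]
$$

Minimising this loss adjusts the weights so that the predicted noise matches the noise that was actually added.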

Conditioning

Although images can be generated using this technique, it is not possible to influence the type of image that the noise predictor will generate, as it is still unconditioned. To control the generation of images using prompts, the model must be conditioned. The objective is to guide the noise predictor so that the subtraction of the predicted noise produces a specific type of image. For instance, entering the prompt “cat” should only generate images of cats.

Text conditioning involves four components: the text prompt, a tokeniser, an embedding and a text transformer. The text prompt is entered by the user and processed by the tokeniser. Stable Diffusion v1 uses OpenAI’s CLIP tokeniser to convert words comprehensible to humans into tokens, that is, numbers readable by a computer (Andrew, 2024). Since a tokeniser can only tokenise words that were seen during its training, Stable Diffusion will only recognise these words.

Each token is then converted into a 768-value vector called an embedding. Each token has its own unique embedding vector, which is determined by the CLIP model. Embeddings place words with similar meanings close together. For instance, “guy”, “man”, and “gentleman” have very similar meanings, so their embeddings are close to each other and the words can be used almost interchangeably in a prompt.
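The idea of tokens and embeddings can be illustrated with a toy example. Everything in it is hypothetical: the four-word vocabulary, the 3-value vectors (CLIP uses 768 values) and the whole-word lookup are assumptions chosen to keep the sketch readable, and the real CLIP tokeniser is considerably more elaborate.

```python
import math

# Hypothetical toy vocabulary: word -> token (an integer).
VOCAB = {"guy": 0, "man": 1, "gentleman": 2, "cat": 3}

# Hypothetical 3-value embeddings, one row per token.
EMBEDDINGS = [
    [0.90, 0.10, 0.00],  # guy
    [0.85, 0.15, 0.05],  # man
    [0.80, 0.20, 0.10],  # gentleman
    [0.05, 0.10, 0.95],  # cat
]

def tokenise(prompt):
    """Map each known word to its token; unknown words are dropped in this toy."""
    return [VOCAB[word] for word in prompt.lower().split() if word in VOCAB]

def embed(tokens):
    """Look up the embedding vector for each token."""
    return [EMBEDDINGS[token] for token in tokens]

def cosine_similarity(a, b):
    """Similarity of two vectors: close to 1 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

tokens = tokenise("guy man cat")
vectors = embed(tokens)
sim_guy_man = cosine_similarity(vectors[0], vectors[1])
sim_guy_cat = cosine_similarity(vectors[0], vectors[2])
```

With these made-up vectors, `sim_guy_man` is much higher than `sim_guy_cat`, mirroring how words with similar meanings end up close together.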

The embedding is then passed to a text transformer, which acts as a universal adapter for conditioning and processing the data further.

This output is used multiple times by the noise predictor through the U-Net, which employs a cross-attention mechanism (information passed from encoder to decoder) to establish the relationship between the prompt and the generated image.

Generating images with Stable Diffusion

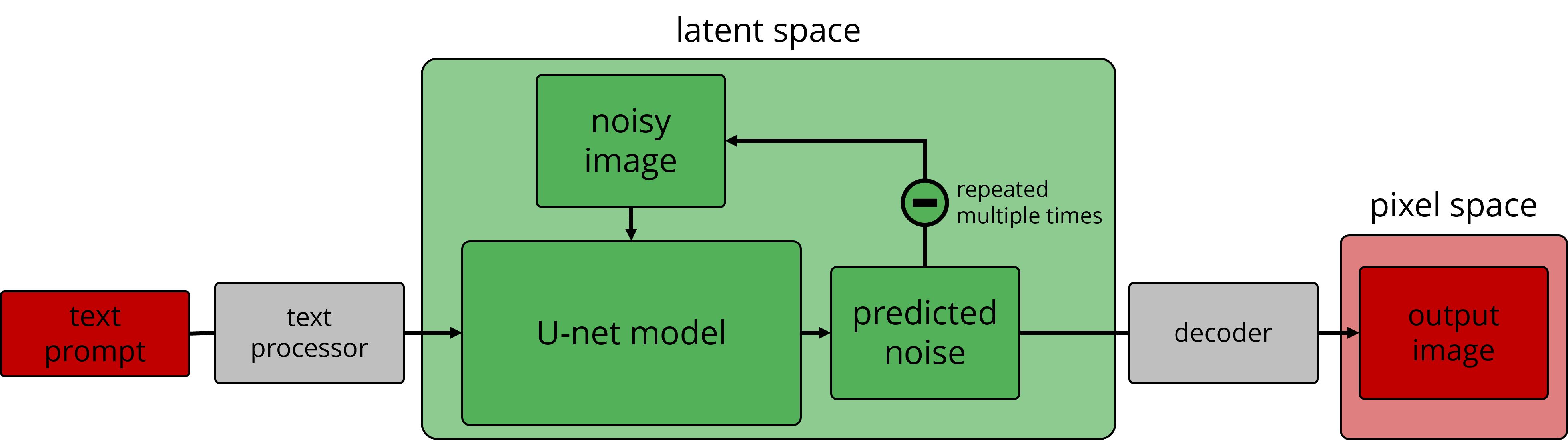

When generating an image using text-to-image, the user’s text prompt input is processed by a text processor. This converts the words into a form comprehensible to the U-Net model.

Stable Diffusion then generates a random noisy image in latent space. This is influenced by the seed, an integer number, which the user provides as input. Using the same seed will generate the same noisy image used in the beginning, which will result in the same image being generated if all other input parameters remain the same.

The U-Net model then takes both the processed user input and the noisy image and calculates the predicted noise based on these inputs. This predicted noise is then subtracted from the original noisy image, resulting in a slightly less noisy image. This resulting image is then used as a new input to the U-Net model, which again predicts noise based on this slightly less noisy image and the text prompt. This process is repeated a number of times called the step count, which is specified beforehand by the user.

Finally, the resulting image is decoded into pixel space, allowing it to be perceived as an actual image by humans. This is the final image output by Stable Diffusion (Andrew, 2024, Rombach, Blattmann, Lorenz, Esser, Ommer, 2021).

Figure 4: Stable Diffusion generates an image by taking a text prompt and a noisy image and predicting the noise to be removed based on these inputs. This process is repeated multiple times and the final result is then decoded into pixel space.
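The generation loop described above can be summarised in a short sketch. The function `predict_noise` is a hypothetical stand-in for the U-Net (it simply treats the distance to a target vector as noise), and the fixed factor of 0.1 is an arbitrary choice for the example. The sketch only shows the control flow: the seed fixes the starting noise, and the noise prediction is subtracted once per step.

```python
import random

def predict_noise(latent, prompt_embedding, step):
    """Hypothetical stand-in for the U-Net noise predictor."""
    return [x - target for x, target in zip(latent, prompt_embedding)]

def generate(prompt_embedding, seed, step_count):
    """Start from seeded random noise and remove predicted noise step by step."""
    rng = random.Random(seed)
    # The seed determines the initial noisy latent image.
    latent = [rng.gauss(0.0, 1.0) for _ in prompt_embedding]
    for step in range(step_count):
        noise = predict_noise(latent, prompt_embedding, step)
        # Subtract a fraction of the predicted noise to get a slightly cleaner latent.
        latent = [x - 0.1 * n for x, n in zip(latent, noise)]
    # A real pipeline would now decode the latent into pixel space.
    return latent

prompt_embedding = [0.2, 0.8, 0.5]  # hypothetical processed prompt
first = generate(prompt_embedding, seed=42, step_count=20)
second = generate(prompt_embedding, seed=42, step_count=20)
other_seed = generate(prompt_embedding, seed=43, step_count=20)
```

Here `first` and `second` are identical because the seed and all other inputs match, while `other_seed` differs.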

Fine-tuning Stable Diffusion

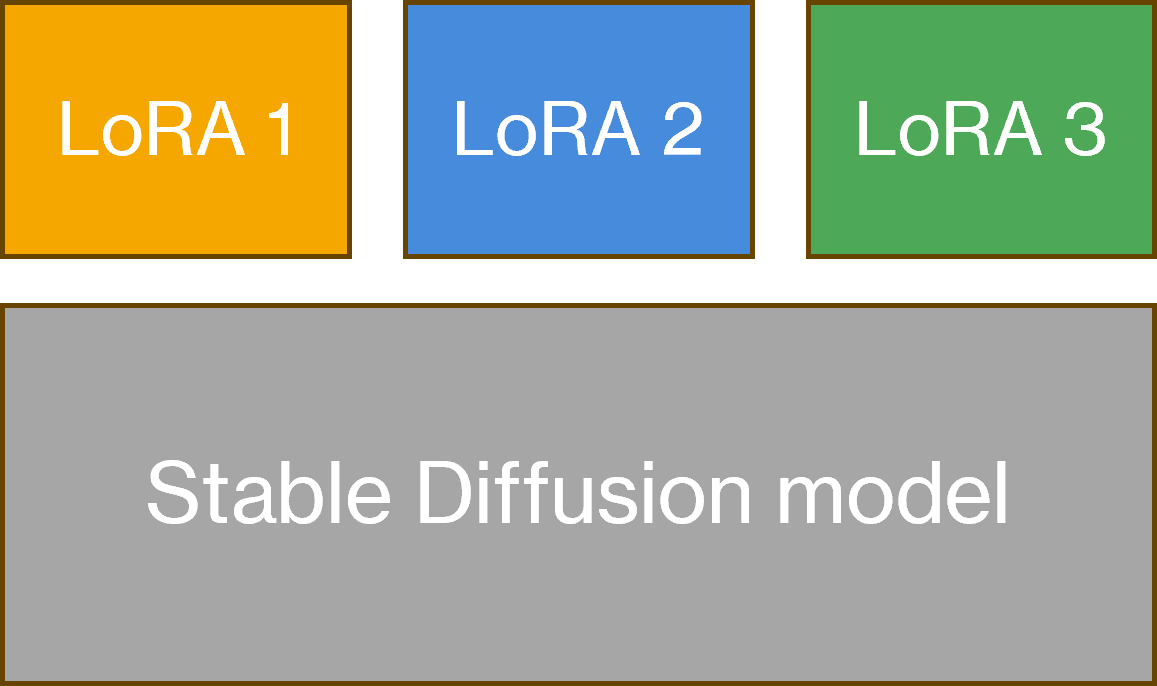

Low-Rank Adaptation

LoRA is a form of fine-tuning that is much more cost- and time-efficient than retraining the full model, because it keeps the pretrained weights frozen and trains only a small set of additional low-rank weights. It can be used on various Large Language Models or Diffusion Models, but this text focuses on using LoRA with Stable Diffusion.

As mentioned above, LoRAs can be used to create images in a particular style. This can be useful if multiple images in the exact same style are desired or if the user wants to generate images in a style that Stable Diffusion cannot produce on its own.

However, LoRAs can also be trained on a specific person, pose, garment or object as long as there are enough images to use during training (Andrew, 2023).
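To make the efficiency argument concrete, the following sketch counts trainable parameters for a full weight update versus a low-rank one and applies a low-rank update to a tiny layer. The layer size of 768 x 768 and the rank of 8 are assumptions picked for illustration, not settings taken from this project, and the helper names are hypothetical.

```python
def full_update_params(d_out, d_in):
    """Trainable parameters when fine-tuning a full d_out x d_in weight matrix."""
    return d_out * d_in

def lora_update_params(d_out, d_in, rank):
    """Trainable parameters in the factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def lora_forward(W, A, B, x, alpha=1.0):
    """Compute W x + alpha * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + alpha * u for b, u in zip(base, update)]

full = full_update_params(768, 768)
low_rank = lora_update_params(768, 768, rank=8)

# Tiny 2 x 2 layer with a rank-1 update (hypothetical numbers).
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[0.5, 0.5]]
B = [[1.0], [2.0]]
x = [2.0, 3.0]
with_lora = lora_forward(W, A, B, x, alpha=1.0)
without_lora = lora_forward(W, A, B, x, alpha=0.0)
```

For these assumed sizes the low-rank factors need 12,288 parameters instead of 589,824, and setting `alpha` to 0 reproduces the output of the frozen layer.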

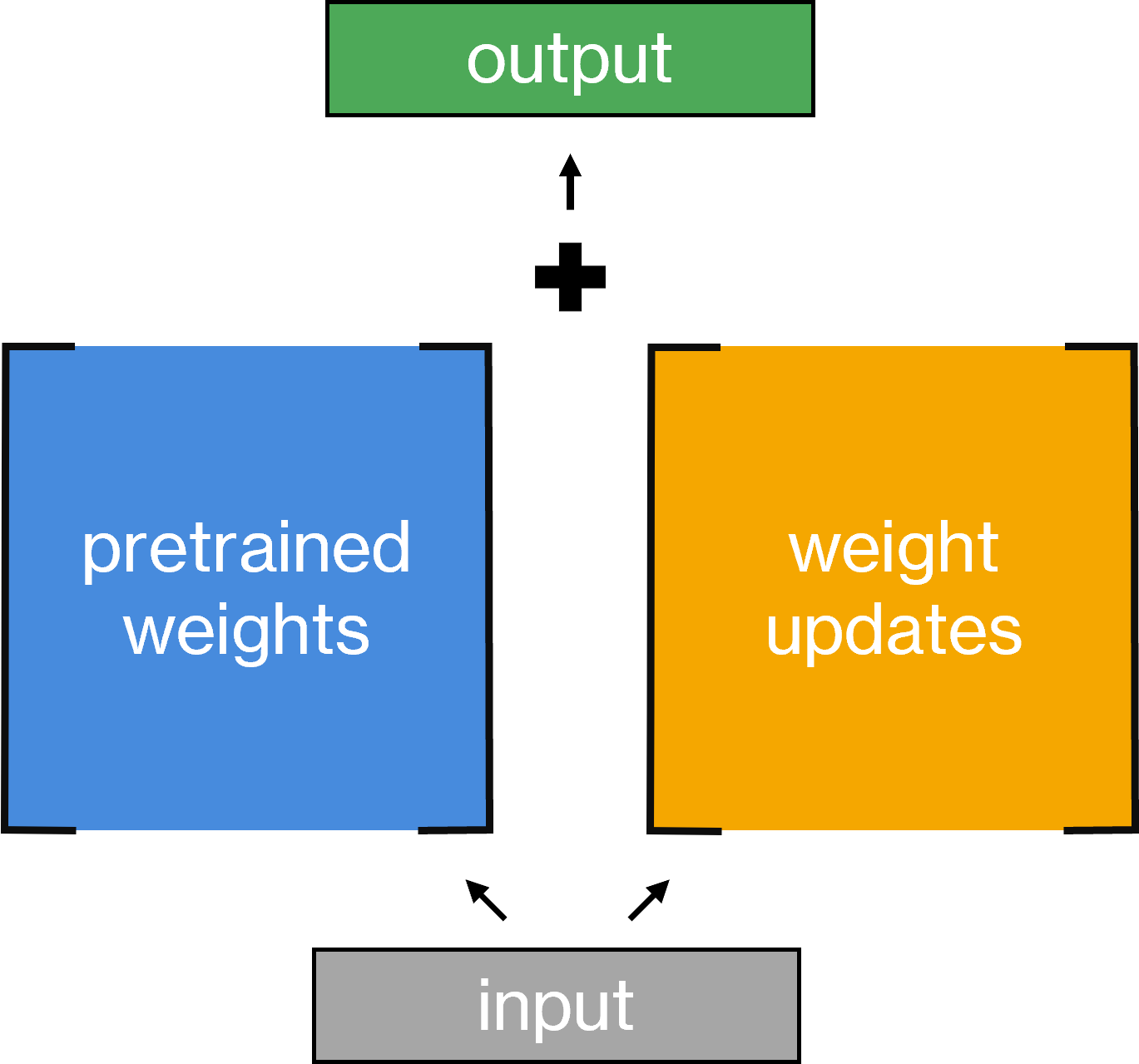

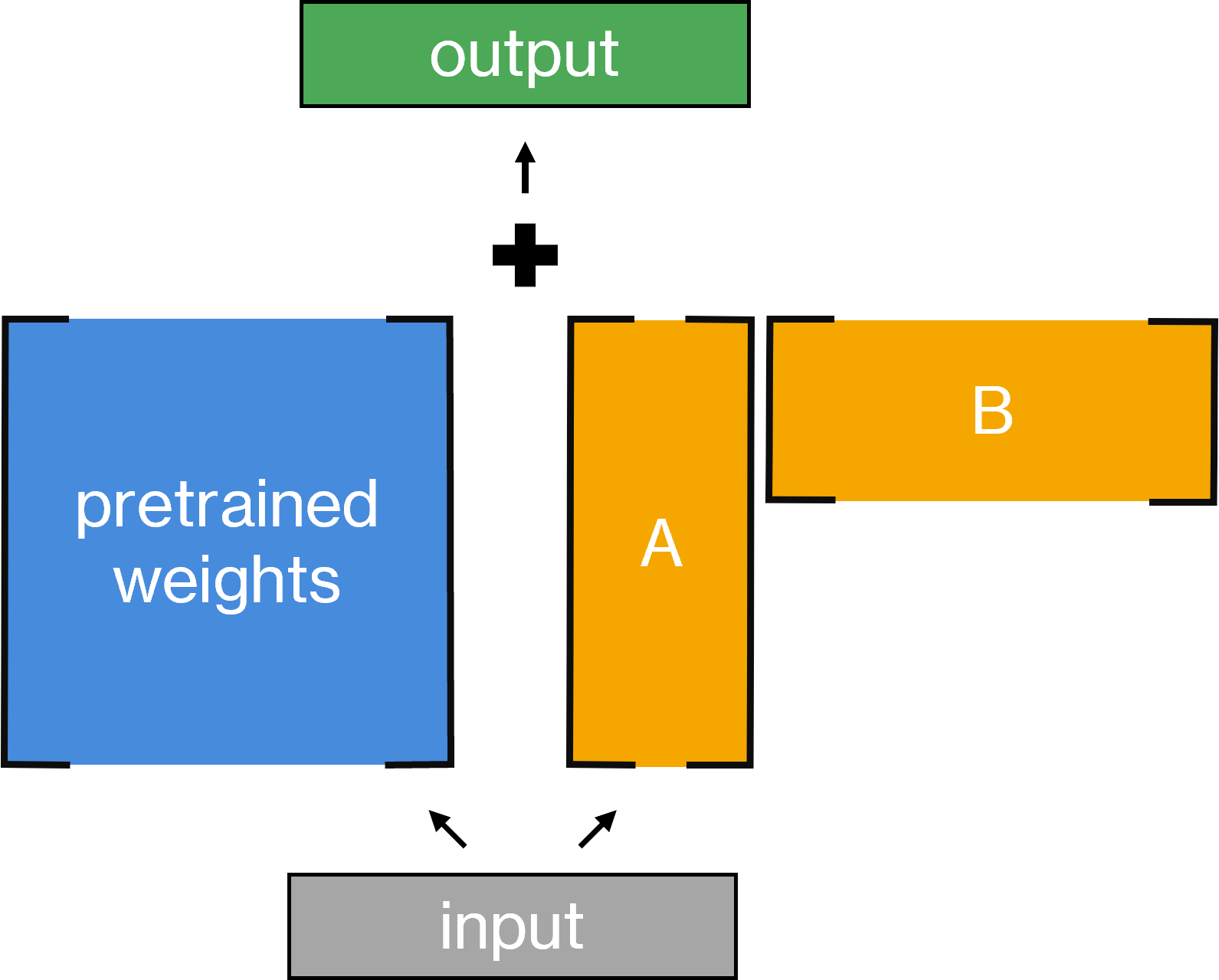

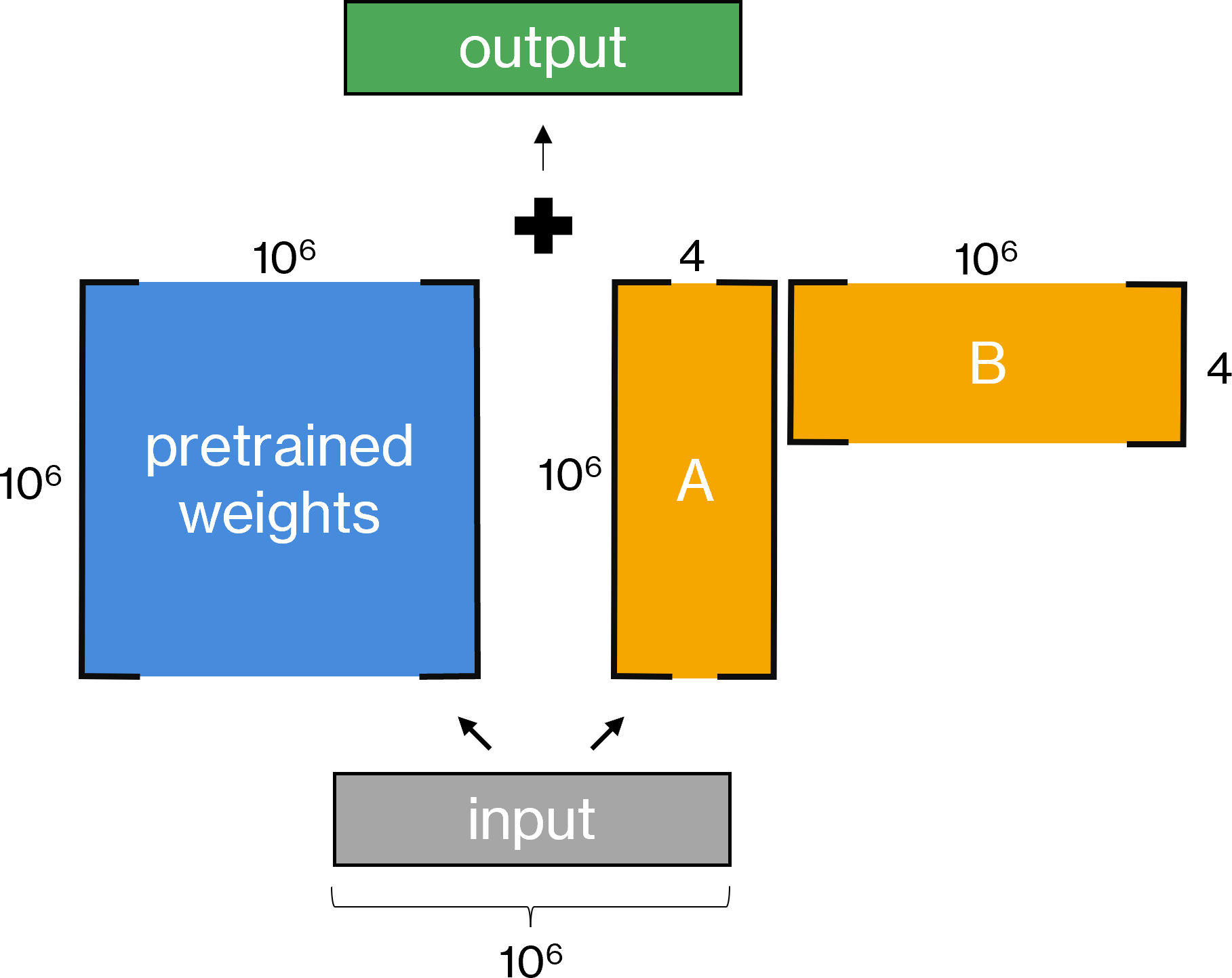

Functionality

Freezing the pretrained weights

Rank Decomposition

Advantages

Training a LoRA

Kohya_SS GUI

The most popular tool for training LoRAs seems to be the Kohya_SS GUI (bmaltais, 2024).

Preparing the data

For training a style LoRA, it is recommended to use between 10 and 100 images in that style (Aitrepreneur, 2023). The images should be consistent in style, but vary in terms of depicted motifs, colours, and viewing angles. Betina Gotzen-Beek kindly sent us some of her illustrations to use for training. For optimal results with Stable Diffusion, it is recommended to use a resolution of either 512x512 (SD 1.5) or 1024x1024 (SDXL) for training, although technically there is no limit on image resolution. As most of her images were much larger, we used multiple smaller excerpts from the original images. These were usually focused on characters as we wanted our LoRA to be able to reproduce characters in her style.

However, it is important to note that images alone are not enough for training. Each image also needs a caption describing its contents, so that the LoRA can learn which text prompt corresponds to which object in the image, and thus know what certain objects look like in the desired style.

Image captions can be created using BLIP captioning, which automatically creates a caption for each image. However, these captions must be manually reviewed and corrected as BLIP does not always identify objects correctly. Manually writing captions is very important as it also determines the LoRA’s focus. For instance, if the captions describe every detail of a character but not the background, generating images with the LoRA will provide great control over the character’s appearance but not over the background.

Important parameters

When training a LoRA, the first decision to make is which Stable Diffusion model to use as a base, as the LoRA will only function with that specific model. In this project, we tested two models: Stable Diffusion 1.5 and Stable Diffusion XL (SDXL).

Stable Diffusion 1.5 was released in October 2022 by Runway ML and is a very general-purpose model, meaning it can generate images of almost any object in varying styles. It is the default model when installing the Automatic1111 Web UI, which makes it a popular choice (Andrew, 2023).

Stable Diffusion XL is a larger successor to SD 1.5 with 6.6 billion parameters (compared to 0.98 billion for Stable Diffusion 1.5). This allows for many improvements such as higher native resolution and image quality, as well as the generation of legible text (Andrew, 2023).

Assessing results

Evaluating a LoRA

To evaluate the quality of a LoRA, it is necessary to establish the criteria for a “good” LoRA. In the case of a style LoRA, its purpose is to accurately capture and reproduce the fine details of an artist’s style. Furthermore, the LoRA should be able to produce all kinds of images in this style, not just objects and scenes already drawn by that artist. A style LoRA is not useful if it can only generate images that already exist. This is called overfitting: a LoRA is trained on a specific dataset and adapts so closely to those specific images that it can only reproduce the images used in training and cannot generate new ones.

So how do you evaluate the quality of a LoRA?

Firstly, different LoRAs can be compared using some objective properties, namely the model size and its loss.

The model size gives an indication of how many weights from the original model were trained during the LoRA training. The larger the LoRA, the more weights have been trained. However, training more weights does not necessarily mean getting a better result, so the model size does not provide any insight into the quality of a LoRA.

Loss, however, is related to the quality of a LoRA as it gives an indication of how closely the images generated by the LoRA match those used in training. Still, this does not necessarily mean that a LoRA with a smaller loss produces better results: A loss of 0.0 would mean the LoRA can only reproduce the training images and is therefore overfitted. So, while you generally want to minimise the loss during training, it also shouldn’t be too small.

There is no value at which each and every LoRA works optimally, so the only reliable way to test a LoRA’s quality seems to be generating many images and visually comparing them with the style that should be replicated. This should be done keeping in mind the specific use case for which the LoRA was intended: In our case, the LoRA should generate images in the style of the artist Betina Gotzen-Beek and be suitable for use in children’s books. This means we only tested the generation of images with the same general subject matter as her images — for instance, there is no need to test the LoRA on its ability to generate horror scenarios.

Comparing different LoRAs

Overview

Our initial experiment “character1” used a dataset of 69 images and SD 1.5 as a base model. The training process consisted of 100 steps per image, resulting in a total of 6900 steps.

Although the generated images using this LoRA produced some satisfactory results, the style was inconsistent and often did not closely resemble the original images we were trying to imitate (refer to Figure 11).

To improve the results, we created a different training set containing only 44 images, which were, however, much more diverse. The second LoRA, “character2”, was trained on this dataset, again with SD 1.5 as the base model. We again used 100 steps per image, but this time with two epochs. An epoch is “one set of learning”, meaning that the training process is repeated once per epoch (bmaltais, 2023). In this case, using two epochs resulted in 8800 total training steps instead of 4400. This led to much better results and the ability to create much more diverse images with this LoRA.

Finally, we also tried using SDXL as a base model, as it generates images with higher resolutions and is better at generating hands and faces. This resulted in the LoRA “character2_sdxl” which again uses the second dataset and 100 steps per image. This time we also decided to train using five epochs to see how this affected the result, resulting in a total of 22000 steps.

|  | character1 | character2 | character2_sdxl |
|---|---|---|---|
| base model | Stable Diffusion 1.5 | Stable Diffusion 1.5 | Stable Diffusion XL |
| number of images used in training | 69 | 44 | 44 |
| number of steps per image | 100 | 100 | 100 |
| number of epochs | 1 | 2 | 5 |
| number of total steps | 6900 | 8800 | 22000 |
| training duration | 1.418 hours (on an NVIDIA GeForce RTX 4080 Laptop with 12 GB VRAM) | 9.869 hours (on an NVIDIA GeForce RTX 4080 Laptop with 12 GB VRAM) | 13.220 hours (on an NVIDIA GeForce RTX 4090 with 24 GB VRAM) |
| training time per step | 0.740 seconds per step (on an NVIDIA GeForce RTX 4080 Laptop with 12 GB VRAM) | 4.037 seconds per step (on an NVIDIA GeForce RTX 4080 Laptop with 12 GB VRAM) | 2.163 seconds per step (on an NVIDIA GeForce RTX 4090 with 24 GB VRAM) |
| loss value at the end of training | 0.163 | 0.1001 | 0.1126 |
| LoRA size | 9.329 MB | 9.337 MB | 891.177 MB |
| results using the same prompt and seed (different resolutions since Stable Diffusion XL has a higher native resolution than Stable Diffusion 1.5) |  |  |  |
| full body shot of old man with white hair and beard wearing a santa outfit riding in a sleigh, snow on the ground, snow falling |  |  |  |
|  |  |  |  |
| full body shot of a boy with short blonde hair sitting in a tree house, green leaves in background, fairy light in tree, light blue background |  |  |  |

Figure 11: comparing three different LoRAs
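The step counts and training durations in the overview follow from simple arithmetic, which the short sketch below reproduces from the table's values. The function names are introduced here for illustration only.

```python
def total_steps(num_images, steps_per_image, epochs):
    """Total training steps: every image is used steps_per_image times per epoch."""
    return num_images * steps_per_image * epochs

def training_hours(steps, seconds_per_step):
    """Convert a step count and a per-step time into hours."""
    return steps * seconds_per_step / 3600

# (images, steps per image, epochs, seconds per step) as listed in the table.
runs = {
    "character1": (69, 100, 1, 0.740),
    "character2": (44, 100, 2, 4.037),
    "character2_sdxl": (44, 100, 5, 2.163),
}

for name, (images, per_image, epochs, sec_per_step) in runs.items():
    steps = total_steps(images, per_image, epochs)
    print(name, steps, round(training_hours(steps, sec_per_step), 2))
```

The computed durations agree with the reported ones up to rounding of the per-step times.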

The detailed training settings for each LoRA can be found here:

character1

character2

character2_sdxl

Loss

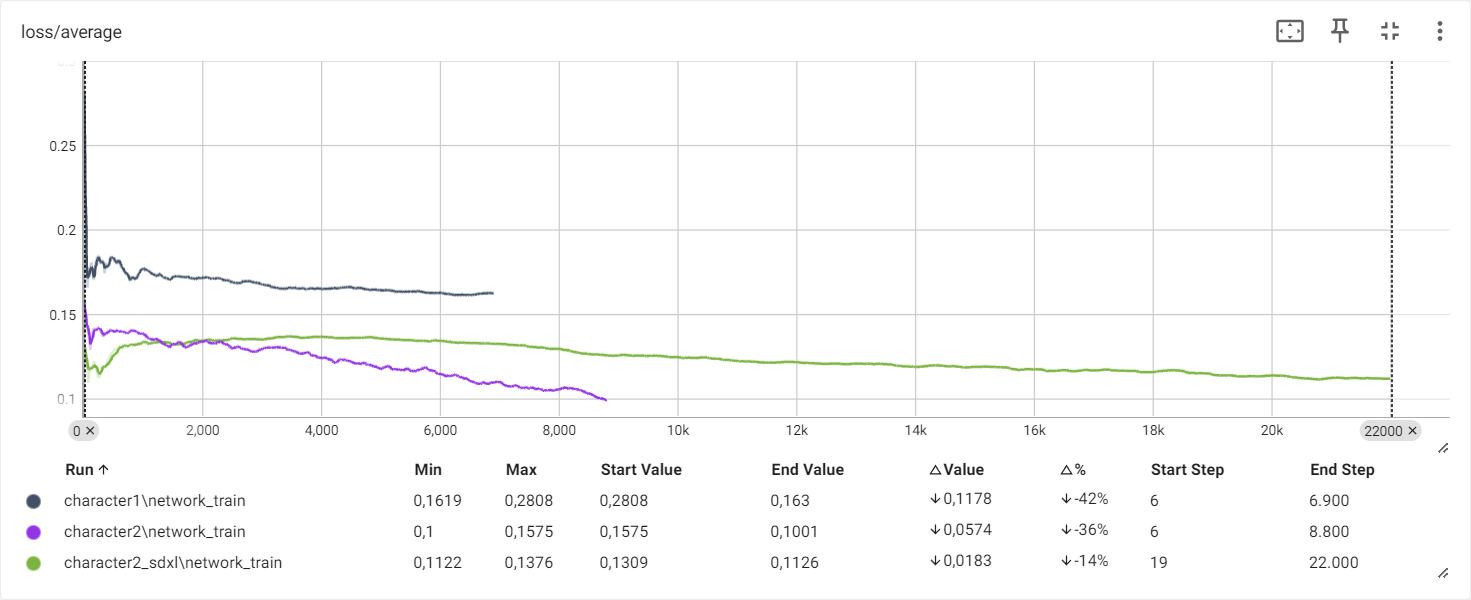

We tracked the loss of our LoRAs during training using TensorBoard.

Figure 12: comparing the average loss per step count for several LoRA trainings

Figure 12 displays the average loss per step count for the three LoRAs character1 (grey), character2 (purple) and character2_sdxl (green). Throughout all three training sessions, there was a decrease in the loss values, with the most notable improvement observed in character1. Despite this progress, it still has the highest loss among the compared LoRAs, while character2 has the lowest.

However, the image comparison in Figure 11 shows that the LoRA with the lowest loss value does not necessarily produce the best images. Although character2 has the lowest loss, character2_sdxl produces superior images, which is due to the different base models used. Since Stable Diffusion XL is capable of producing images of much higher quality than Stable Diffusion 1.5, using it together with a LoRA also results in much better outcomes.

In the case of these three LoRAs, the one which was trained on Stable Diffusion XL also has a significantly larger file size.

When inspecting the graphs, the lines for character1 and character2_sdxl appear to plateau, suggesting that further training is unlikely to improve the result. Conversely, the loss value for character2 appears to continue to decrease, indicating that additional training could improve the result.

Generating images with LoRA

Using a LoRA to generate images does not change the workflow within Stable Diffusion. All prompts and settings can be used in the same way as without using a LoRA. The only difference is the presence of a special tag within the prompt window that specifies the LoRA to be used and the alpha value with which it influences image generation. Setting the alpha to 0 yields the same result as using only the original model without a LoRA while setting the alpha to 1 results in using the fully fine-tuned model (Ryu, 2023). This tag contains the keyword lora, the name of the LoRA and its alpha value separated by colons in angle brackets. For instance, <lora:character2_sdxl:0.7> specifies the LoRA named “character2_sdxl” with an alpha of 0.7. Multiple LoRAs can be used simultaneously using this principle.
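The tag format can be illustrated with a small parser. This is a hypothetical helper written for this text, not the code the Automatic1111 Web UI uses internally; the regular expression simply assumes the `<lora:name:alpha>` layout described above.

```python
import re

# Matches tags of the form <lora:NAME:ALPHA>, e.g. <lora:character2_sdxl:0.7>.
LORA_TAG = re.compile(r"<lora:([^:>]+):([0-9]*\.?[0-9]+)>")

def extract_lora_tags(prompt):
    """Return the prompt without LoRA tags and a list of (name, alpha) pairs."""
    tags = [(name, float(alpha)) for name, alpha in LORA_TAG.findall(prompt)]
    cleaned = LORA_TAG.sub("", prompt).strip()
    return cleaned, tags

text, loras = extract_lora_tags(
    "a girl with long brown hair <lora:character2_sdxl:0.7>"
)
```

After this call, `text` holds the plain prompt and `loras` holds the single pair of LoRA name and alpha value. A prompt with several tags yields one pair per tag.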

After establishing that character2_sdxl consistently outperforms the other LoRAs, we tested various approaches for image generation within Stable Diffusion to identify the one generating optimal results.

Stable Diffusion Parameters

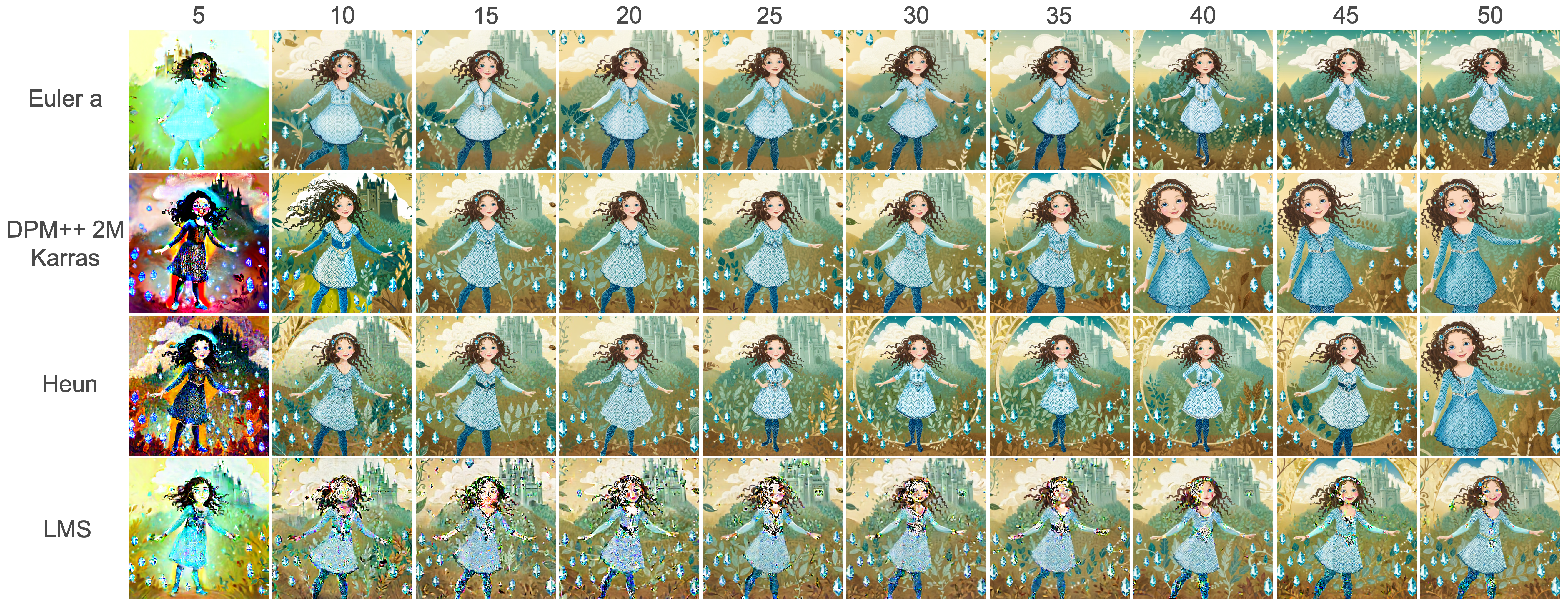

The LoRA was tested with different samplers to determine which one yields the best results. It is important to note that although the tested samplers produce different outputs, one is not necessarily superior to the others.

Some samplers are however better suited for specific use cases: As seen in Figure 13, Euler a produces good results with a relatively low step count. In the cases tested, 20 steps seem to produce the best output image. This is useful for general applications as a low step count also means a shorter generation time.

DPM++ 2M Karras and especially Heun on the other hand produce very different results depending on the step count, but generally need more steps than Euler a to produce good results. This can be useful if the user wants to generate several different images while only changing the step count and if generation time is not important.

Results produced using LMS as a sampler contain many noticeable artefacts at all step counts tested here, which ranged up to 50.

Figure 13: comparing different samplers using the same seed for generation; LoRA scale 0.7; SDXL

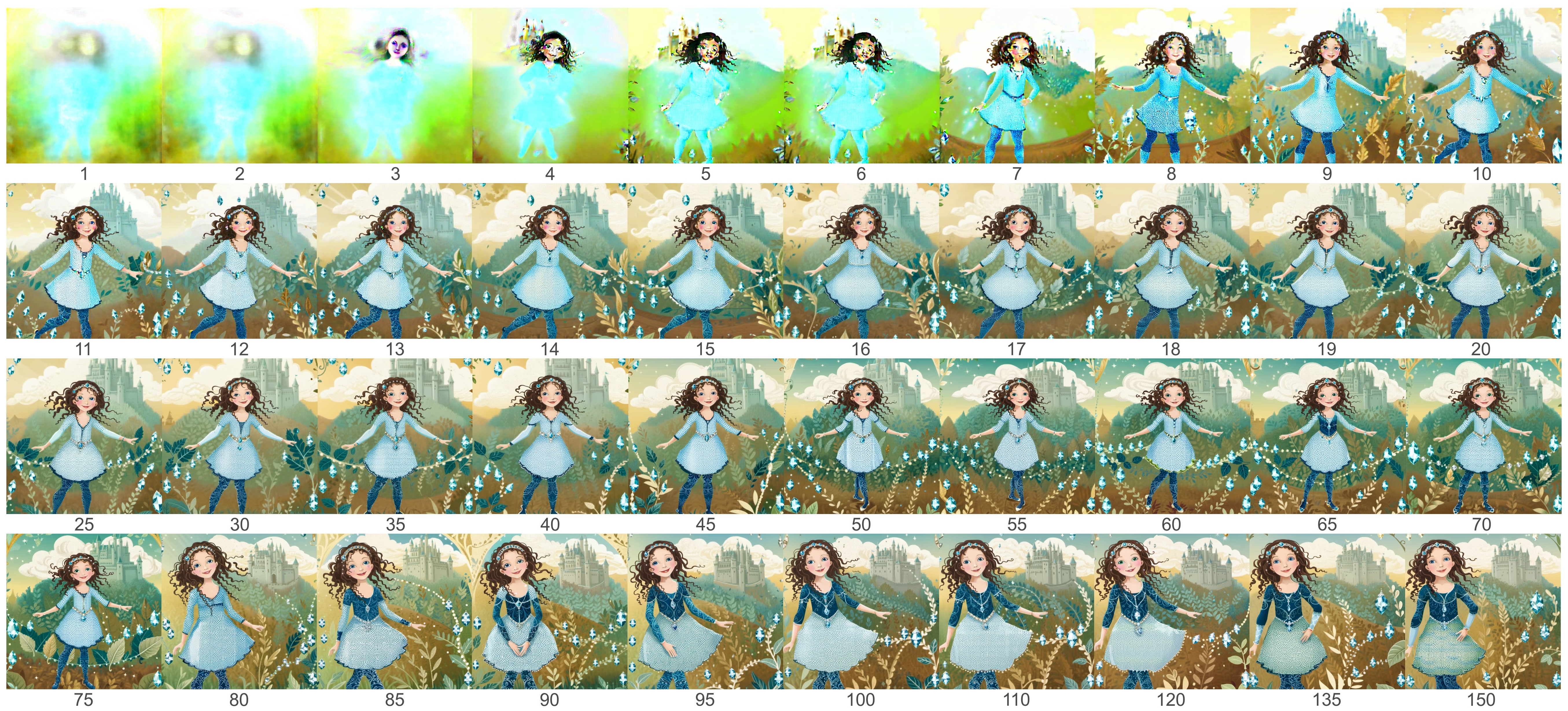

As mentioned above, the step count strongly influences the quality of the generated image. In general, lower step counts result in lower quality images, while the minimum step count that produces artefact-free results depends on the sampler used. For instance, 15 steps are sufficient when using Euler a or DPM++ 2M Karras, while 20 or more steps are required when using Heun. Here we tested different step counts ranging from 1 (minimum in Stable Diffusion) to 150 (maximum) using Euler a as the sampler.

Figure 14: comparing different step counts using Euler a as sampler

Using a step count lower than 15 will result in very blurred images. A step count of 15 or more usually produces high-quality results that do not improve drastically with step counts greater than 20. Using different step counts above 60 can produce different outputs in terms of content while the quality remains the same. For instance, using 80 steps will produce an image of a girl with a different pose and dress than using 90 steps.

Using a high step count and slightly adjusting it can therefore be used to generate different images. However, if this is the goal, it is faster to use Stable Diffusion’s batch feature without changing the step count. The batch feature produces multiple images using the same settings during one generation and only changes the seed for each image, resulting in multiple different images. This produces several results with lower step counts, which can be useful as high step counts also take a long time to generate.

To test exactly how the step count affects the generation time, we also compared the time taken to generate the images above. The images were generated using Stable Diffusion XL at a resolution of 1024x1024 on a GeForce RTX 4090.

| number of steps | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| time (seconds) | 1.6 | 1.8 | 2.2 | 2.5 | 2.8 | 2.9 | 3.5 | 3.7 | 4.1 | 4.4 | 4.7 | 5.1 | 5.4 | 5.5 | 5.9 | 6.1 | 6.6 | 6.8 | 7.1 | 7.5 |

| number of steps | 25 | 30 | 35 | 40 | 45 | 50 | 55 | 60 | 65 | 70 | 75 | 80 | 85 | 90 | 95 | 100 | 110 | 120 | 135 | 150 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| time (seconds) | 9.0 | 10.6 | 11.9 | 13.6 | 15.1 | 16.7 | 18.1 | 19.7 | 21.4 | 23.0 | 24.2 | 25.8 | 27.6 | 29.1 | 30.7 | 31.9 | 35.4 | 39.0 | 43.2 | 47.7 |

Figure 15: time taken for generating a 1024x1024 image using SDXL and different step counts on a GeForce RTX 4090

Figure 16: time taken for generation using different step counts

As shown in Figure 16 above, there is a linear relationship between the number of steps and the time required for generation. On this hardware, each additional step adds roughly 0.3 seconds (for instance, 7.5 seconds for 20 steps versus 31.9 seconds for 100 steps), on top of a small fixed overhead.
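The linear relationship can be checked with an ordinary least-squares fit over the measurements from Figure 15. The helper `fit_line` is written here for illustration; the data are copied from the table.

```python
# Step counts and measured generation times (seconds) from Figure 15.
steps = list(range(1, 21)) + list(range(25, 101, 5)) + [110, 120, 135, 150]
times = [
    1.6, 1.8, 2.2, 2.5, 2.8, 2.9, 3.5, 3.7, 4.1, 4.4,
    4.7, 5.1, 5.4, 5.5, 5.9, 6.1, 6.6, 6.8, 7.1, 7.5,
    9.0, 10.6, 11.9, 13.6, 15.1, 16.7, 18.1, 19.7, 21.4, 23.0,
    24.2, 25.8, 27.6, 29.1, 30.7, 31.9, 35.4, 39.0, 43.2, 47.7,
]

def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

seconds_per_step, fixed_overhead = fit_line(steps, times)
print(f"{seconds_per_step:.3f} s per step, {fixed_overhead:.2f} s overhead")
```

The slope is the time cost of one additional step, and the intercept estimates the fixed time spent outside the sampling loop.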

Results

After identifying the parameters in Stable Diffusion that work best with our LoRA, we tested its limitations by generating images using only text prompts. There are two types of prompts within Stable Diffusion: Text prompts, which describe what the image should depict, and negative prompts, which specify what should not be included in the generated image.

Keywords such as “symmetrical face” and “symmetrical eyes” were added to all the prompts below, as we found that they help in creating more natural-looking images.

For the same reason, we also used negative prompts such as “weird eyes”, “warped face”, “fused fingers” to prevent these phenomena from occurring.
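As an illustration of how prompt and negative prompt fit together, the sketch below assembles the settings for one text-to-image request as a dictionary. The field names are assumed to match the Automatic1111 Web UI's txt2img API and should be checked against the API documentation of the installed version. The helper itself, the example prompt and the default values are hypothetical, and nothing is sent to a server.

```python
import json

# Keywords and negative prompts mentioned in the text above.
QUALITY_KEYWORDS = "symmetrical face, symmetrical eyes"
NEGATIVE_PROMPT = "weird eyes, warped face, fused fingers"

def build_txt2img_payload(prompt, seed=-1, steps=20, sampler_name="Euler a",
                          width=1024, height=1024, n_iter=1):
    """Assemble request settings; field names are assumed, not verified here."""
    return {
        "prompt": f"{prompt}, {QUALITY_KEYWORDS}",
        "negative_prompt": NEGATIVE_PROMPT,
        "seed": seed,            # -1 is assumed to request a random seed
        "steps": steps,
        "sampler_name": sampler_name,
        "width": width,
        "height": height,
        "n_iter": n_iter,        # assumed to correspond to the batch count
    }

payload = build_txt2img_payload(
    "full body shot of a cat lying in front of a fireplace, wooden floor"
)
payload_json = json.dumps(payload, indent=2)
```

Keeping the recurring keywords and negative prompts in one place makes it easier to apply them consistently across many generations.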

|  |  |  |  |
|---|---|---|---|
| a girl with long brown hair floating from cloud to cloud | full body shot of a girl with long brown hair floating from cloud to cloud, blue background with white clouds, yellow stars, white sparkles | a girl with long brown hair wearing a yellow dress floating on clouds, blue background with white clouds, yellow stars, white sparkles | full body shot of a boy with short blonde hair sitting in tall grass, green fern and leaves, pink flowers, light blue background |
| full body shot of a boy with short blonde hair sitting in a tree house, green leaves in background, fairy light in tree, light blue background | full body shot of a boy with short dark hair sitting on a tree branch, green leaves in background, fairy light in tree, light blue background | full body shot of old man with white hair and beard wearing a santa outfit riding in a sleigh, snow on the ground, snow falling | full body shot of a cat lying in front of a fireplace, wooden floor |

Figure 17: images generated by Stable Diffusion using SDXL and character2_sdxl LoRA

The LoRAs created during the research for this paper can produce images that are indistinguishable from actual illustrations at first glance. Betina Gotzen-Beek herself states that the perspectives, facial expressions, and body shapes are exactly as she wants them to be. She also likes the plasticity and the placement of the shadows, as they are in places she would choose as well.

This might give the impression that anyone can produce high-quality art by using the techniques proposed in this paper to create their own images. However, there are some limitations.

Firstly, the LoRA can only produce images that contain elements present in the training data. For instance, Betina Gotzen-Beek notes that this image of a cat (Figure 18), and in particular the interior of the room, does not reflect her style and choice of colours at all — which is because the training data set did not contain any images depicting a similar scene.

Figure 18: image generated by Stable Diffusion using SDXL and character LoRA

Moreover, the brush strokes are not as precise and intentional as they would be in a real painting. Additionally, there are still issues with eyes, hands, and feet. Often, there are oddly shaped or asymmetrical eyes, too many or fused fingers, or missing feet.


Figure 19: excerpts of images generated by Stable Diffusion using SDXL and character LoRA, upscaled using R-ESRGAN 4x+

All of these issues require manual correction but can be easily resolved. Simply correcting the hands and brush strokes in an image is still significantly less work than creating an entire illustration from scratch.

However, one major problem remains: Stable Diffusion does not provide much control over the image it generates. Prompts can be used to specify the desired image, but prepositions such as “left”, “right”, “up” or “down” do not have the desired effect of allowing the user to specify where objects should be placed.

Similarly, when generating images, most results are also unsatisfactory, with only a portion of the generated images meeting the desired standard.

We have found that the best method is to generate multiple images using the same settings by increasing the batch count within Stable Diffusion and then selecting the best result from the generated images.

The images above were generated using a batch count of four and picking the best image from the four generated. Still, not all of the images accurately represent what was described in the text prompt.

Generating a large number of images and refining the prompt between image generations can produce a satisfactory image, but this process can be time-consuming and unreliable.

ControlNet

To gain more control over image generation, we also tested the ControlNet extension to the Automatic1111 Web UI (Mikubill, 2024). This extension offers several additional inputs that influence the image generation based on the selected control type. Since the goal of this project is to determine the best method for artists to create illustrations using Stable Diffusion, we ideally wanted to use a sketch as input and have Stable Diffusion create a finished illustration based on it. The two control types that seemed most promising for this use case were the Scribble/Sketch control type and the Canny control type.

Scribble/Sketch

The Scribble/Sketch control type enables the user to input a simple sketch that does not need to be very detailed or precise, as this sketch is only used as a very rough guide during image generation in addition to a text prompt. This allows the user to influence the image composition while still producing vastly different results with each generation, which can be useful when generating images based on a rough idea or when brainstorming. However, it does not grant much more control than using a text prompt alone, and it may not be very useful for artists who can express their concrete ideas through a detailed sketch.

Figure 20: simple sketch used as input for ControlNet


Figure 21: images generated using ControlNet Scribble/Sketch

text prompt: boy with short red hair holding a red balloon floating, normal face, green background with leaves

negative prompt: weird hands, too many limbs, merged fingers, multiple balloons

Canny

The next method we tested is using the Canny edge detection algorithm. This algorithm takes an image as input and generates a monochrome image containing all edges that were detected in the original image in white on a black background.

Figure 22: image used as input for the Canny algorithm

Figure 23: edge image generated from input image
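To show what such an edge image looks like in data terms, the following sketch marks pixels with a strong brightness gradient in white on a black background. It is a deliberately simplified gradient threshold using Sobel filters, not the full Canny algorithm, which additionally smooths the image, thins the edges and applies two-level hysteresis thresholding. The function name and the tiny test image are assumptions made for the example.

```python
def sobel_edges(gray, threshold):
    """Return a binary edge map: 255 (white) on edges, 0 (black) elsewhere."""
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    # Border pixels are skipped because the 3x3 filter needs all neighbours.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Horizontal brightness change (right column minus left column).
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            # Vertical brightness change (bottom row minus top row).
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 255
    return edges

# 6x6 greyscale image: dark left half, bright right half (hypothetical data).
image = [[0, 0, 0, 255, 255, 255] for _ in range(6)]
edge_map = sobel_edges(image, threshold=100)
```

In the resulting `edge_map`, only the pixels along the boundary between the dark and bright halves are white.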

These edges are then used as guides by Stable Diffusion during image generation. This means that very specific images can be generated by inputting an image to be used as a guide. This technique allows great control over the subject of the image, while additional factors such as colours, lighting, mood, and backgrounds can be changed using text prompts.

|  |  |

| prompt: girl with blonde hair holding a black cat | prompt: girl with red hair holding a grey cat, green background, muted colours | prompt: girl with blonde hair holding a pink cat |

|  |  |

| prompt: girl with black hair holding a black cat in the rain, blue background | prompt: girl holding cat at sunset, golden hour lighting | prompt: girl holding cat during a storm, lightning in background |

Figure 24: image variations created using SDXL, ControlNet Canny and a text prompt

Using an existing image to create an edge image and using this as input works well for altering aspects of the image such as colours, lighting, or mood. Because the detected edges largely determine the composition, the input image or sketch must be chosen carefully to achieve the desired outcome. There are also occasional issues with lines or areas of an object not being recognised as part of the same object. For instance, the cat’s lower body is often missing under the girl’s hands.

Using a sketch as input

Instead of transforming an existing illustration into an edge image for image generation, a sketch with clear outlines can be used as a guiding input. This approach allows artists to simply draw a sketch and have Stable Diffusion generate the fully illustrated image. This method was tested using the following drawing as input:

Figure 25: line art drawing used as input for ControlNet Canny

This produced the following results:

|  |

| prompt: girl with blonde hair holding a black cat | prompt: girl with brown hair holding a grey cat |

|  |

| prompt: girl with blue hair holding a black cat in the rain | prompt: girl with blonde hair holding a black cat in the rain, blue background |

Figure 26: image variations created using SDXL, ControlNet Canny and a text prompt

Using a sketch as input worked relatively well in producing images that were clearly based on that sketch. However, the overall image quality was lower compared to using an illustration and an edge image based on it. The image style was not as close to the original artist’s style, and the images appeared somewhat blurred and lacked detail, likely due to the less detailed input sketch.

Additionally, this particular sketch appears to be overly complex. Many of the generated images are incomplete, with the cat’s lower body missing or the girl’s sleeves drawn incorrectly. In some cases, the cat is even placed in an entirely different location. Figure 27 shows some examples of undesirable results:

|  |  |

Figure 27: image variations created using SDXL, ControlNet Canny and a text prompt

text prompt: girl with blonde hair holding a black cat

Using a real image as input

Finally, we also tested using a photograph as input to ControlNet Canny. This would significantly reduce the time taken to create a finished illustration, as there would be no need to manually draw an input image. However, it also offers less creative freedom, as the photo has to closely match the desired final result.

Figure 28: photograph used as input for the Canny algorithm |  Figure 29: edge image generated from input image |

|  |

| prompt: girl on a skateboard | prompt: girl with blue helmet and yellow jacket on a skateboard |

|  |

| prompt: girl with blue helmet and yellow jacket on a skateboard | prompt: girl with blue helmet and yellow jacket on a skateboard |

Figure 30: image variations created using SDXL, ControlNet Canny and a text prompt

This method produced lower-quality results than generating images without ControlNet or using an illustration as input to the Canny control type.

Additionally, there are problems with accurately matching an object to the colour specified in the prompt. Although the same prompt was used for three of the images above, the helmet is sometimes rendered yellow and the jacket blue, the reverse of what was specified.

Furthermore, the photo must closely resemble the desired image, as every detected detail is used as a guide. This can be especially challenging when depicting people, as their real proportions often differ from those in the artist’s style. Even when using a photo of a child, as in this example, the generated image may not reflect the desired proportions. Therefore, this method may be useful for backgrounds or images with a more realistic style, but it may not be suitable for creating finished illustrations in the particular style used for this project.

Conclusion

In this paper we have proposed a workflow for generating images in a specific art style using Stable Diffusion, Low-Rank Adaptation and ControlNet. Stable Diffusion is used for image generation and is fine-tuned using Low-Rank Adaptation to create images in a specific style. Finally, ControlNet is used to generate images based on a pre-existing illustration or a sketch, providing more control over the generated image.

This technique can produce convincing and consistent results that closely resemble human art. Betina Gotzen-Beek herself is impressed with the similarity between the generated images and her own style. She also says she can use the generated repeating and large-scale patterns for backgrounds to save time when drawing.

There are some minor issues with characters, such as oddly shaped fingers or eyes, that require manual correction. Even so, this still saves a significant amount of time compared to creating an entire illustration from scratch. The main issue is the lack of control over the generation process. Even when using ControlNet, the AI struggles with complex motifs and with identifying connected objects. As a result, multiple images have to be generated while the input parameters are refined to achieve the desired result, which can be time-consuming.

Although this workflow is not suitable for complicated images, it is applicable to simpler images and backgrounds and can save artists a lot of time.

As AI technologies are further developed and improved, they may soon be able to generate very complicated and intricate illustrations, or even entire panel sequences, as seen in comics. The significant improvements from Stable Diffusion 1.5 to Stable Diffusion XL show that this could happen in the near future.

References

Aitrepreneur. (2023, February 3). ULTIMATE FREE LORA Training In Stable Diffusion! Less Than 7GB VRAM!. https://www.youtube.com/watch?v=70H03cv57-o (accessed: 17 November 2023)

Andrew. (2024, January 4). How does Stable Diffusion work?. Stable Diffusion Art. https://stable-diffusion-art.com/how-stable-diffusion-work/#How_training_is_done (accessed: 10 February 2024)

Andrew. (2023, December 5). Stable Diffusion Models: a beginner's guide. Stable Diffusion Art. https://stable-diffusion-art.com/models/ (accessed: 10 February 2024)

Andrew. (2023, November 17). Stable Diffusion XL 1.0 model. Stable Diffusion Art. https://stable-diffusion-art.com/sdxl-model/ (accessed: 10 February 2024)

Andrew. (2023, November 22). What are LoRA models and how to use them in AUTOMATIC1111. Stable Diffusion Art. https://stable-diffusion-art.com/lora/ (accessed: 10 February 2024)

AUTOMATIC1111. (2022). Stable Diffusion Web UI [Computer software]. commit cf2772fab0af5573da775e7437e6acdca424f26e. https://github.com/AUTOMATIC1111/stable-diffusion-webui

bmaltais. (2024). kohya_ss [Computer software]. commit 89cfc468e1afbee2729201f1ddeaf74016606384. https://github.com/bmaltais/kohya_ss.git

bmaltais. (2023, July 11). LoRA training parameters. https://github.com/bmaltais/kohya_ss/wiki/LoRA-training-parameters

Hu, E., Shen, Y., Wallis, P., Allen-Zhu, Z., Li, Y., Wang, S., Wang, L., Chen, W. (2021, June 17). LoRA: Low-Rank Adaptation of Large Language Models. https://arxiv.org/abs/2106.09685 (accessed: 7 February 2024)

Mikubill. (2024). sd-webui-controlnet [Computer software]. commit a5b3fa931fe8d3f18ce372a7bb1a692905d3affc. https://github.com/Mikubill/sd-webui-controlnet

Ortiz, S. (2024, February 5). The best AI image generators to try right now. ZDNET. https://www.zdnet.com/article/best-ai-image-generator/ (accessed: 8 February 2024)

Palmer, R. [@arvalis]. (2022, August 14). What makes this AI different is that it's explicitly trained on current working artists. You can see below that the AI generated image(left) even tried to recreate the artist's logo of the artist it ripped off. This thing wants our jobs, its actively anti-artist. [Tweet]. Twitter. https://twitter.com/arvalis/status/1558623545374023680 (accessed: 7 February 2024)

Randolph, E., Biffot-Lacut, S. (2023, June 12). Takashi Murakami loves and fears AI. The Japan Times. https://www.japantimes.co.jp/culture/2023/06/12/arts/takashi-murakami-loves-fears-ai/ (accessed: 7 February 2024)

Rombach, R., Blattmann, A., Lorenz, D., Esser, P., Ommer, B. (2022, June). High-Resolution Image Synthesis With Latent Diffusion Models. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). https://huggingface.co/CompVis/stable-diffusion-v1-1 (accessed: 10 February 2024)

Rombach, R., Blattmann, A., Lorenz, D., Esser, P., Ommer, B. (2021, December 20). High-Resolution Image Synthesis with Latent Diffusion Models. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). https://arxiv.org/abs/2112.10752 (accessed: 10 February 2024)

Roose, K. (2022, September 2). An A.I.-Generated Picture Won an Art Prize. Artists Aren't Happy. The New York Times. https://www.nytimes.com/2022/09/02/technology/ai-artificial-intelligence-artists.html (accessed: 7 February 2024)

Ryu, S. (2023). lora [Computer software]. commit bdd51b04c49fa90a88919a19850ec3b4cf3c5ecd. https://github.com/cloneofsimo/lora

Stability AI. (2023, March 25). stablediffusion [Computer software]. commit cf1d67a6fd5ea1aa600c4df58e5b47da45f6bdbf. https://github.com/Stability-AI/stablediffusion

Stable Diffusion Web. (2024). Stable Diffusion Online. https://stablediffusionweb.com/ (accessed: 10 February 2024)

Tremayne-Pengelly, A. (2023, June 21). Will A.I. Replace Artists? Some Art Insiders Think So. Observer. https://observer.com/2023/06/will-a-i-replace-artists-some-art-insiders-think-so/ (accessed: 7 February 2024)

Notes

AI tools, namely ChatGPT 3.5 and DeepL, were used to assist with grammar and spelling corrections. These tools were employed to enhance the accuracy and clarity of the text.

All images presented in this paper were created by the author.